Artificial intelligence has promised to accelerate everything — from coding to cancer research. But in May 2025, that promise hit a very public roadblock.

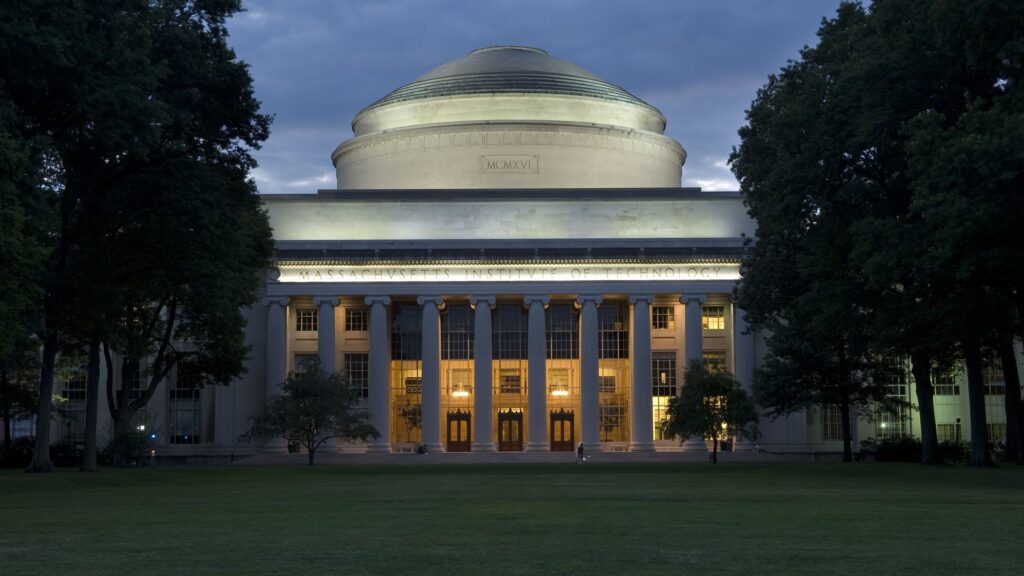

The MIT AI study, once praised for claiming AI tools boosted scientific discovery in real-world labs, has now been officially disavowed by MIT itself.

The university stated it had “no confidence in the provenance, reliability or validity of the data” and asked that the paper be withdrawn from public discourse. The reason? Questions over how the AI system functioned, how the results were reported, and whether the data used was even real.

This academic scandal — unfolding at one of the world’s most respected research institutions — is more than just a headline. It’s a flashing warning sign for the future of AI credibility.

Here are five critical lessons from the MIT AI study controversy.

1. AI Research Must Meet the Same Scientific Standards as Any Other Field

The now-discredited study, authored by MIT doctoral student Aidan Toner-Rodgers, claimed AI tools used in a materials science lab led to measurable increases in new discoveries and patents.

That claim made headlines. But behind it, critics noticed:

- Missing details about the AI tool’s capabilities

- Inconsistent data points

- Lack of reproducibility

AI research, especially in academia, must follow strict data validation, peer review, and transparent methodology. Hype cannot replace hard science.

2. Institutional Endorsement Is Not Infallible

The paper had support from high-profile economists, including Nobel laureate Daron Acemoglu and his MIT colleague David Autor, who believed it showcased how AI could complement human scientists.

But after its review, MIT stated:

“The Institute has no confidence in the veracity of the research contained in the paper.”

Even world-class institutions and experts can be misled when rigorous validation steps are skipped.

3. Preprints and Peer Pressure Are a Dangerous Combo

The paper was initially uploaded to arXiv, the preprint server widely used in academia. This allowed it to gain traction before undergoing peer review — a trend becoming more common in the AI world.

Social media and publication buzz can create pressure to treat preprints as verified facts. The collapse of the MIT AI study shows how risky that is, especially in high-stakes fields like scientific discovery.

4. Scientific Reputation Can Be Damaged by One Bad Paper

MIT’s disavowal of the study didn’t just affect one author. It led to:

- Public questioning of the lab involved

- Backlash against MIT’s internal review process

- Headlines suggesting that AI research is unreliable

This backlash hurts not just one study — it erodes public trust in AI research at large.

5. The AI Hype Cycle Needs a Reality Check

The MIT AI study claimed dramatic productivity improvements using an AI tool. But the incident exposes how overstating AI’s real-world capability can backfire.

Whether you’re in academia, government, or the private sector, the lesson is clear:

Every AI claim must be verifiable, reproducible, and open to scrutiny.

We’re entering a world where AI is writing code, designing proteins, and co-authoring research papers. The bar for trust must be raised — not lowered.


🧠 So What Does the MIT AI Controversy Really Teach Us?

It teaches us that:

- AI tools aren’t magic bullets

- Scientific claims must remain falsifiable and transparent

- Institutions must publish retractions as aggressively as breakthroughs

- Academic credibility depends on open review, not closed prestige

This incident is a major wake-up call, especially as AI-generated research becomes more common.
